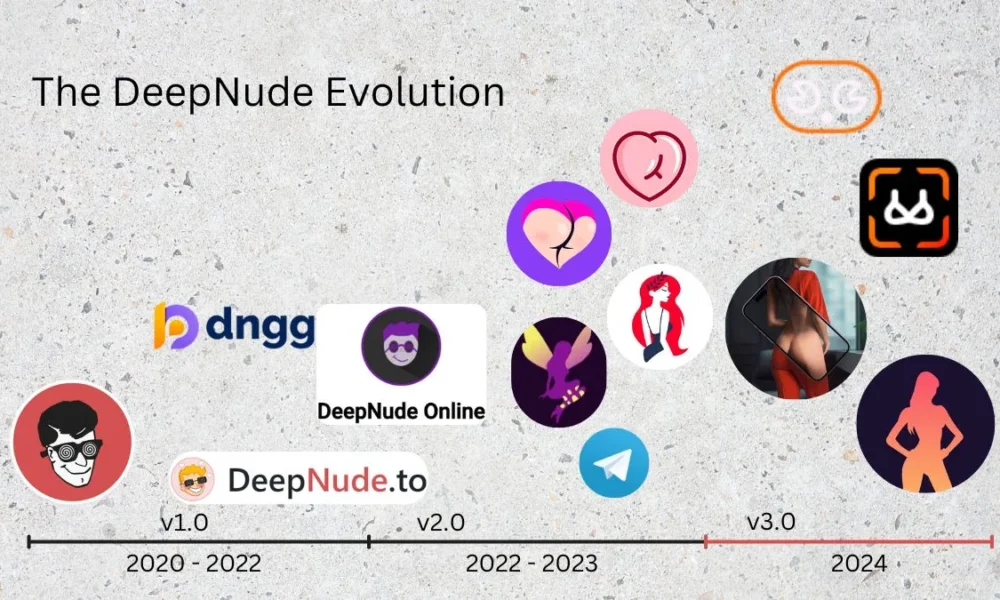

The rapid evolution of artificial intelligence continues to blur the line between reality and digital manipulation, with tools like “Shirt Up Video”. This emerging AI feature, currently exclusive to two Telegram bots, uses deepfake technology to generate short videos where clothed models appear to undress. Here’s what users need to know about its mechanics, risks, and the serious legal consequences of misuse.


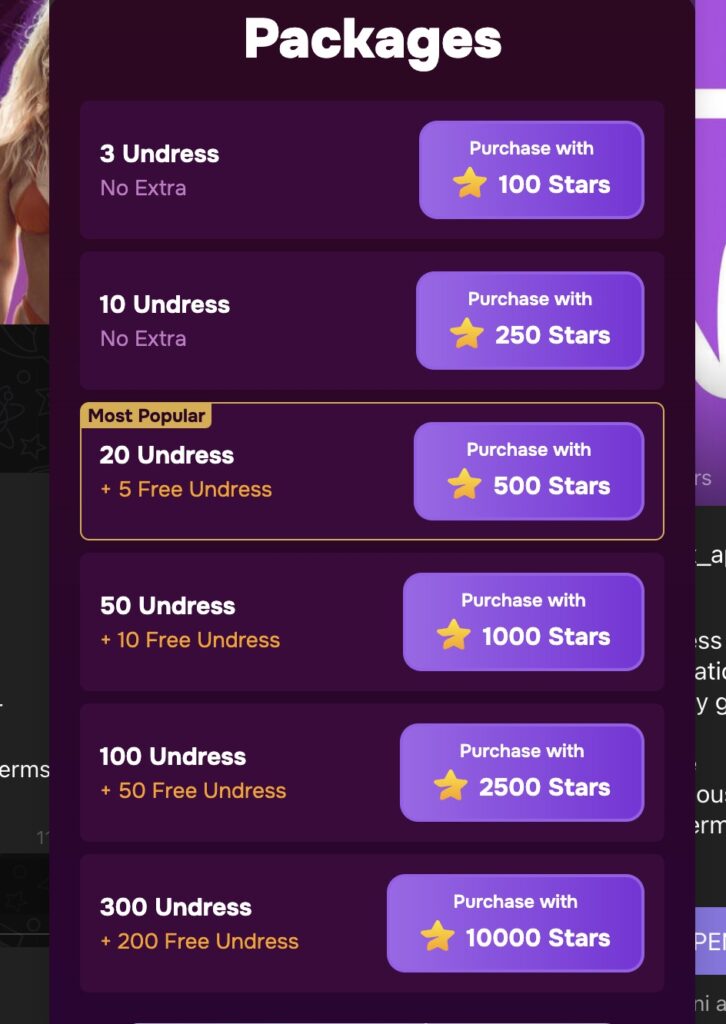

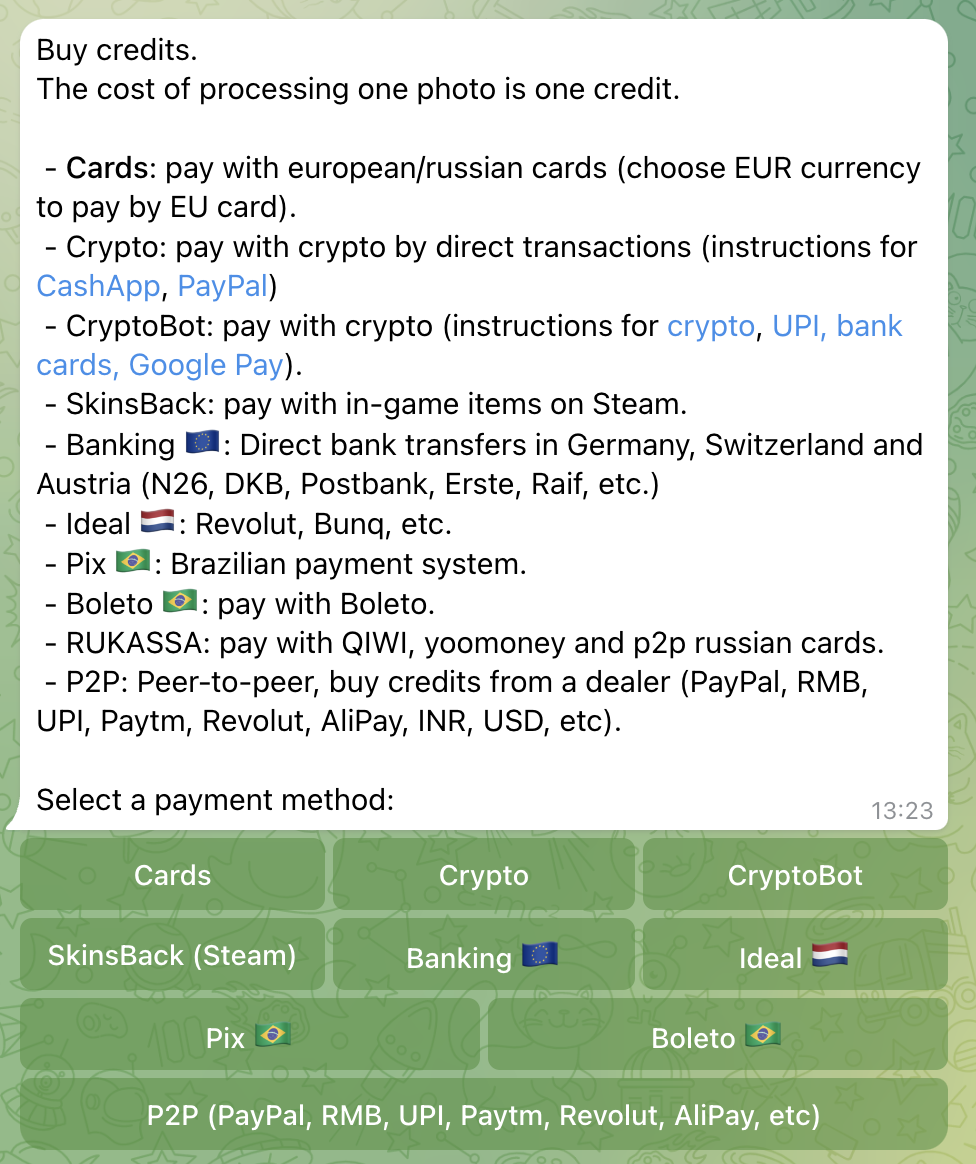

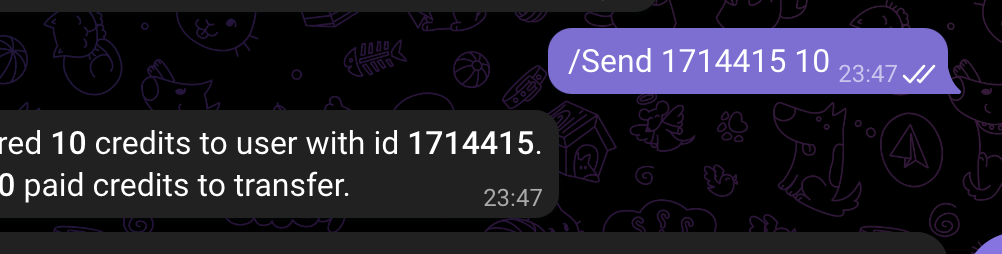

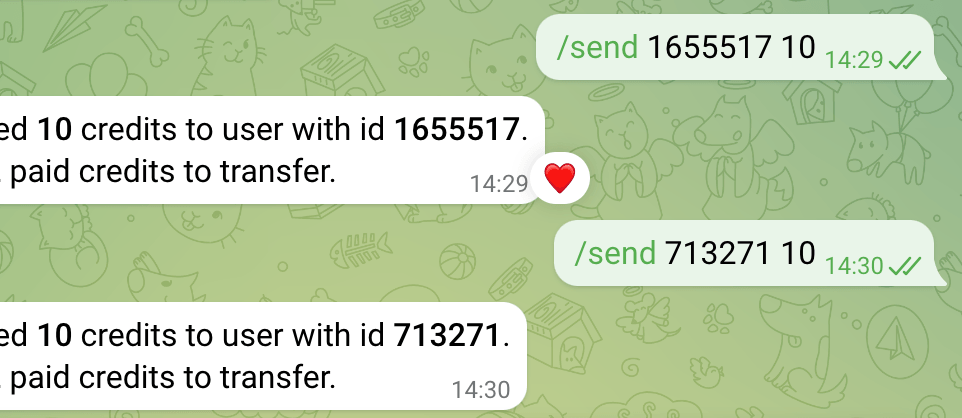

- ShirtUp Video Bot – First Video Undress Tool on the Market. Quality: 4.7

- Free 1 Try

- Price from 2 USDT for 2 undress.

- Options: Shirt Up / Shirt Up v.2 / BlowJ0b / Lick Pen1s

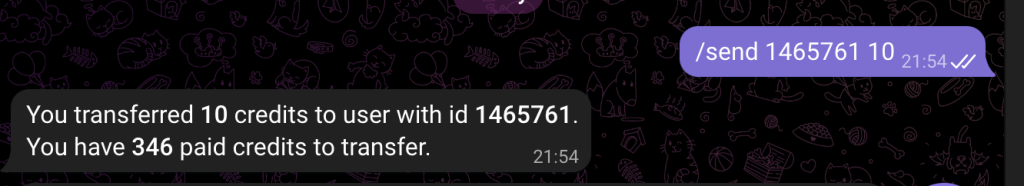

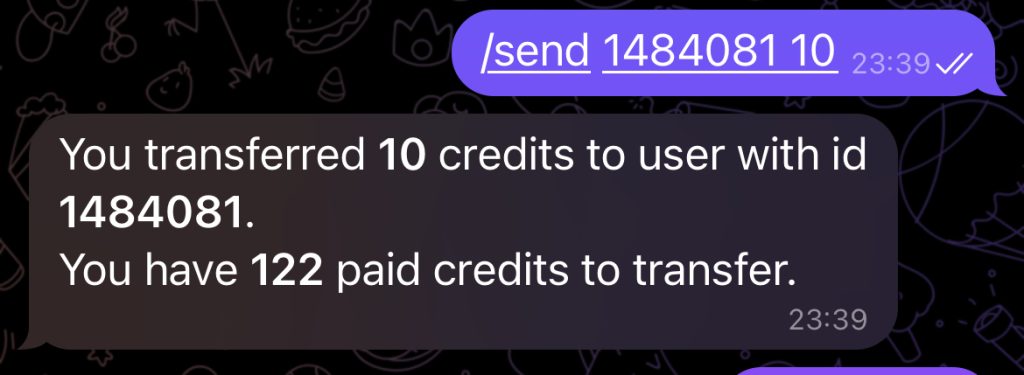

- @OKbra Bot – Nude bot with Shirt Lift Options. Quality: 4.9

Free 1 TryNo Free- Price from 15 USDT for 10 videos.

- Options: Shirt Lift / Ahegao Face / Lick C0ck

Each of these applications generates a video for 2 minutes. There is no watermark. The final video cuts the photo and shows the model above the waist.

What Is the “Shirt Up Video” AI Feature?

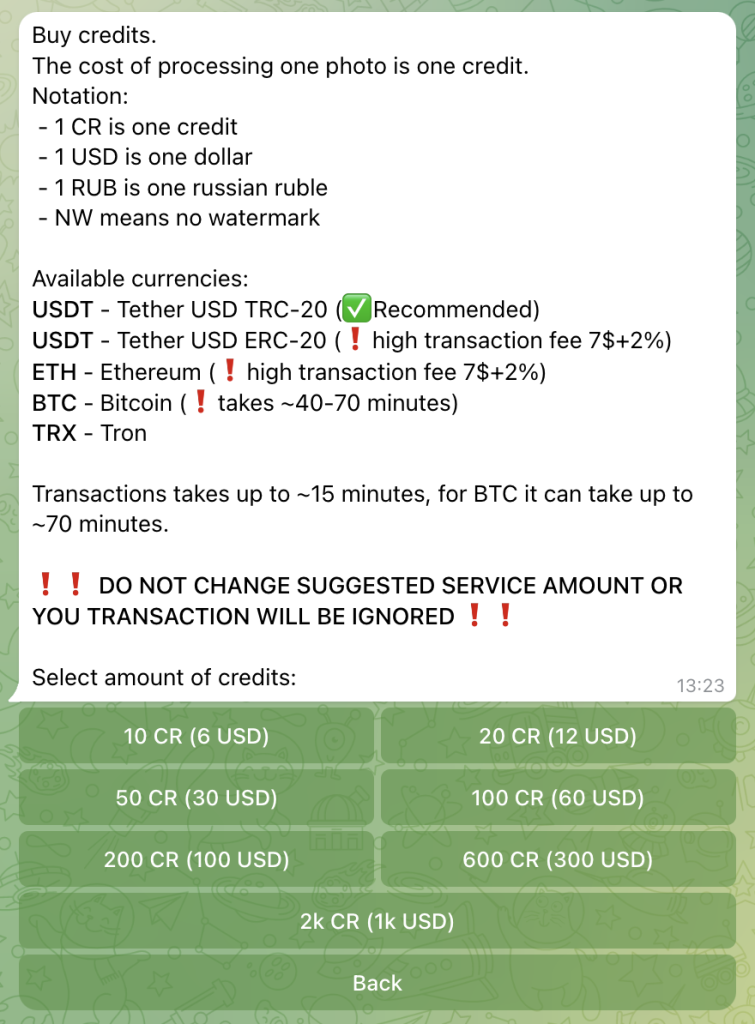

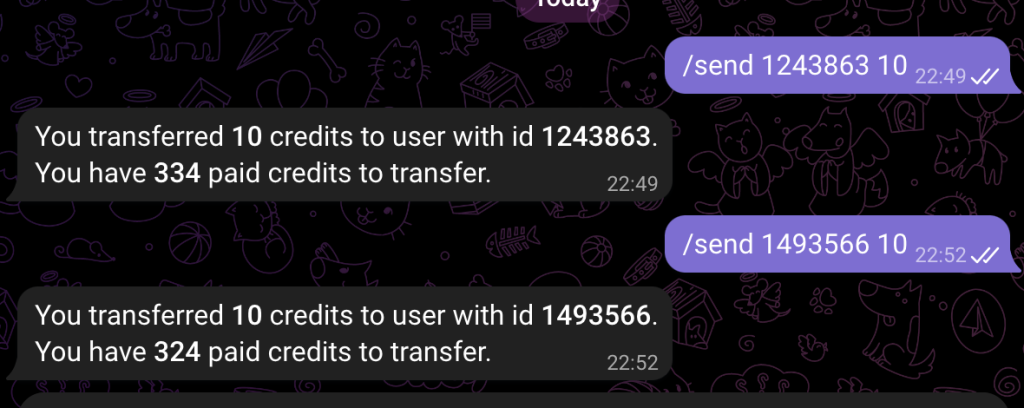

The “Shirt Up Video” tool leverages advanced deep learning algorithms to create hyper-realistic, 1–3-second MP4 clips. Starting with a single photo uploaded by a user, the AI generates a sequence of frames that simulate the model lifting her shirt to expose her breasts. The process begins with the original image and incrementally alters each subsequent frame, culminating in a fabricated nude reveal.

Can I see examples of generated videos?

There are very limited free attempts at video generation, but I’ll give you a few examples from each tool here.

This technology relies on generative adversarial networks (GANs) trained on vast datasets of human anatomy and movement. While the result is technically impressive, it raises alarming questions about consent, privacy, and digital exploitation.

Another example:

Another test Shirt Up:

Ahegao Face Video by OkBra Bot Example

This option doesn’t undress the girl, but creates an animation in which she sticks out her tongue and rollup eyes like in anime.

How Does It Work? A Technical Breakdown

- Image Upload: Users submit a photo of a clothed individual (often sourced without permission).

- Frame Interpolation: The AI analyzes body posture, fabric textures, and lighting to predict how clothing would move during the “undressing” motion.

- Deepfake Rendering: Each frame is altered to expose more skin, with the final frame showing fully generated nudity.

- Video Output: The seamless sequence is compiled into an MP4 file, creating the illusion of a natural movement.

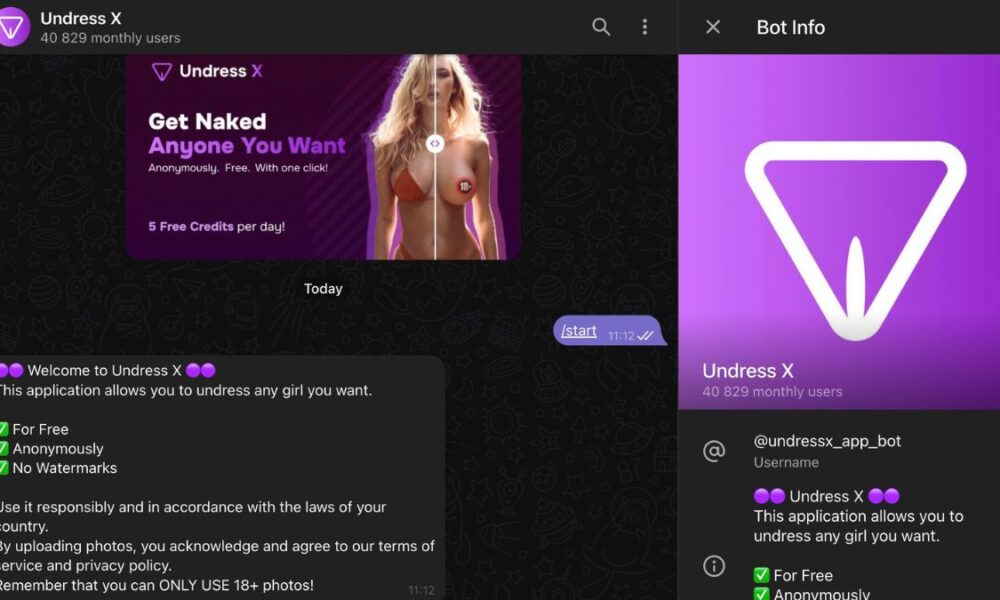

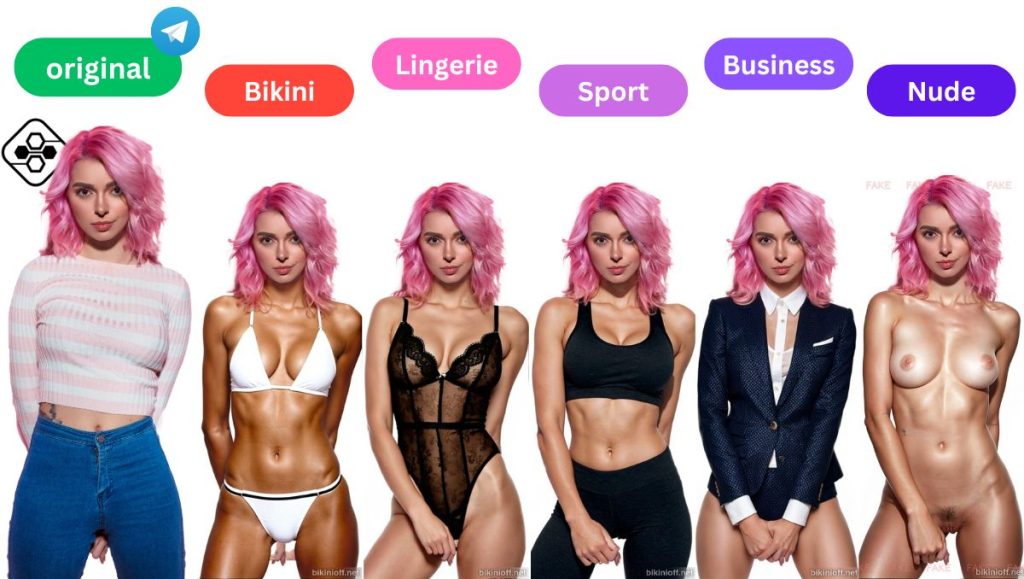

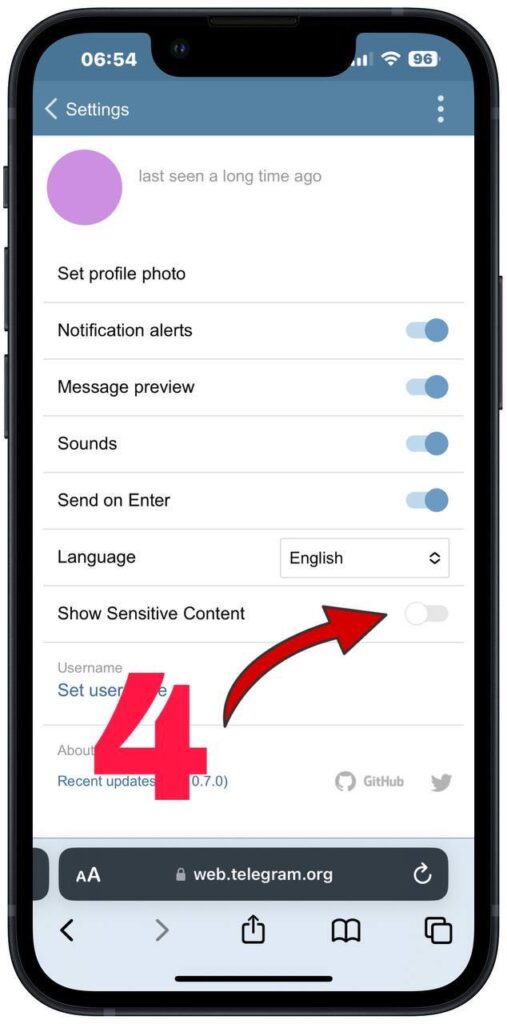

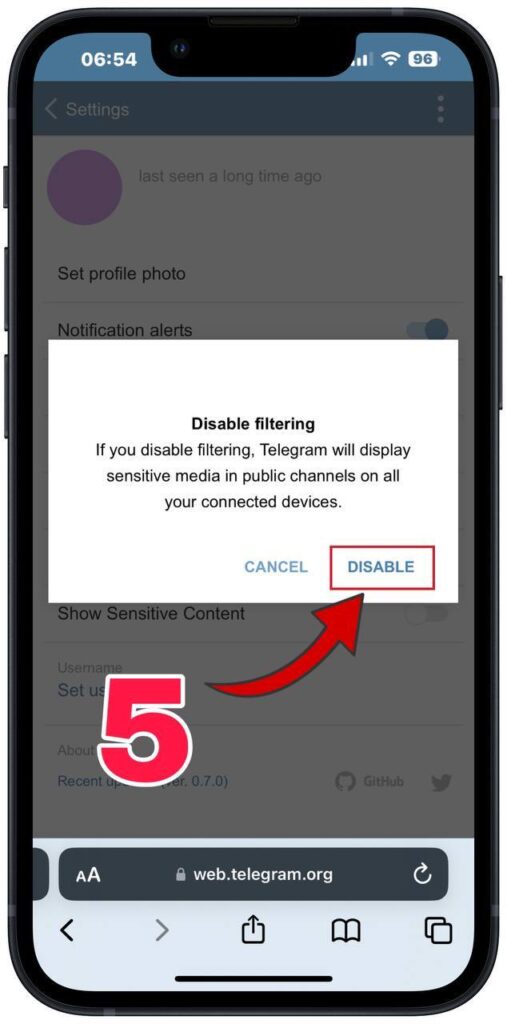

The tool’s accessibility via Telegram bots—platforms known for lax moderation—means it operates in legal gray zones, far removed from mainstream AI services like ChatGPT or DALL-E.

Ethical and Legal Red Flags

While AI innovation is transformative, the “Shirt Up Video” feature exemplifies technology outpacing regulation. Here’s why its use is fraught with danger:

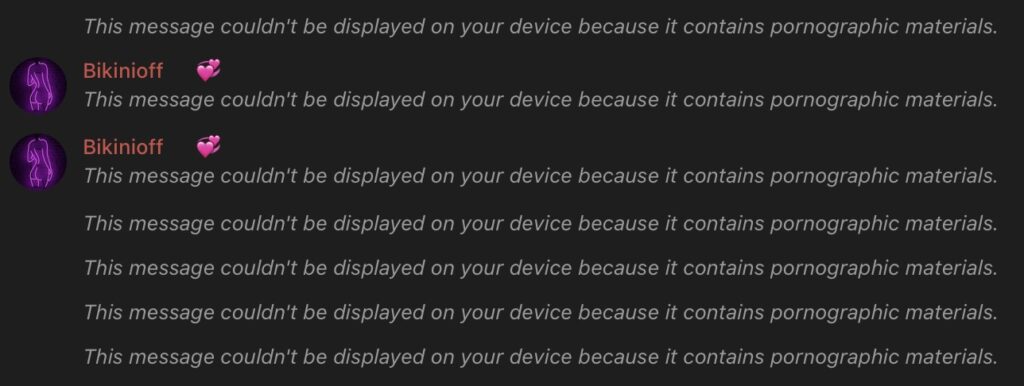

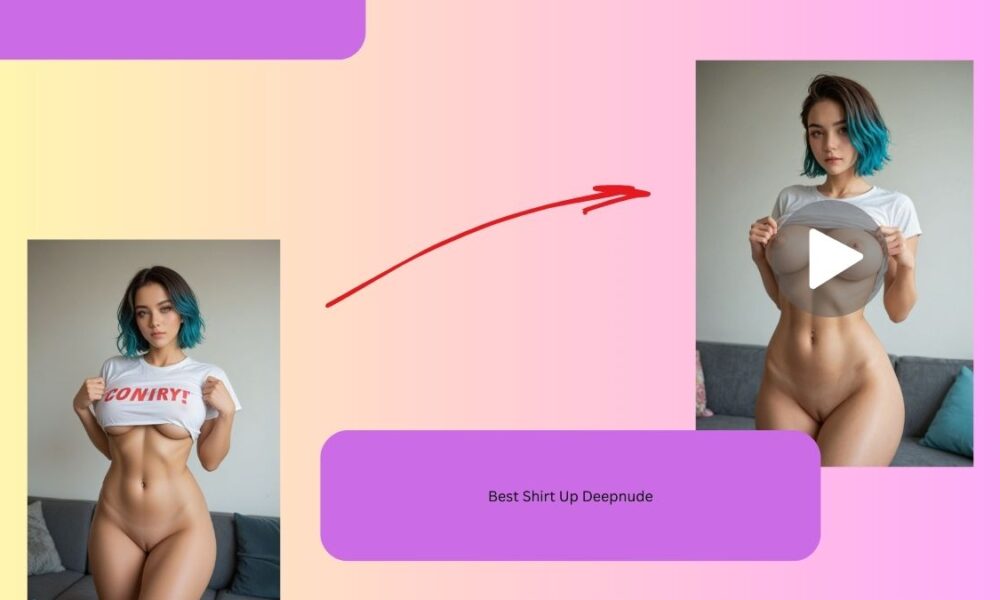

- Non-Consensual Content: Most victims are unaware their images are being manipulated, violating their privacy and dignity.

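- Deepfake Pornography Laws: Many jurisdictions now criminalize non-consensual sexual deepfakes. In the U.S., the TAKE IT DOWN Act (2025) makes it a federal crime to publish non-consensual intimate imagery, including AI-generated imagery; the Deepfake Report Act, by contrast, concerns government reporting on deepfakes and creates no criminal offense. In the UK, the Online Safety Act 2023 makes sharing such images an offense, and EU Directive 2024/1385 requires member states to criminalize it. Perpetrators face fines, lawsuits, or imprisonment.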

- Reputational Harm: Misused videos can destroy personal and professional lives, fueling harassment or blackmail.

Legal expert Dr. Elena Torres warns: “Creating or distributing deepfake nudity without consent isn’t just unethical—it’s illegal. Jurisdictions worldwide are tightening penalties, treating it as a form of sexual abuse.”

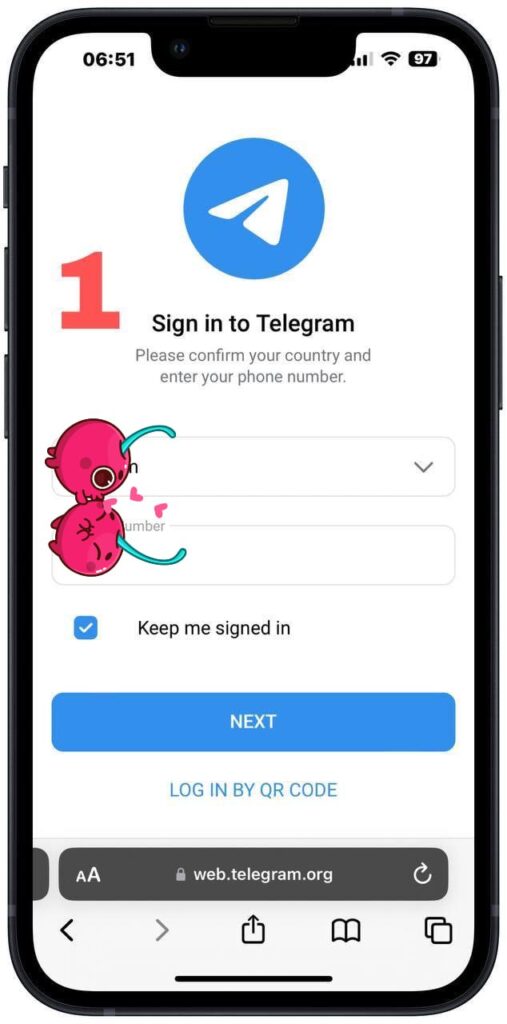

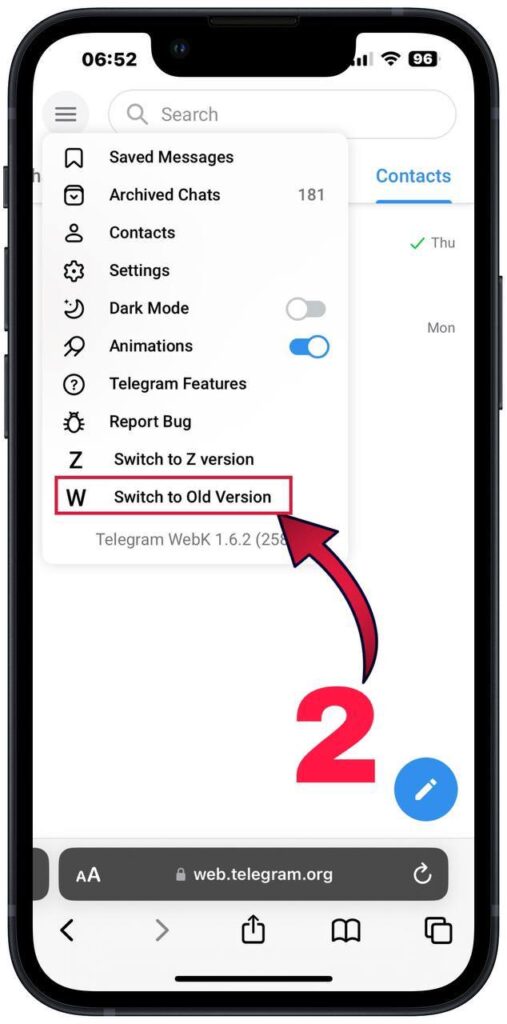

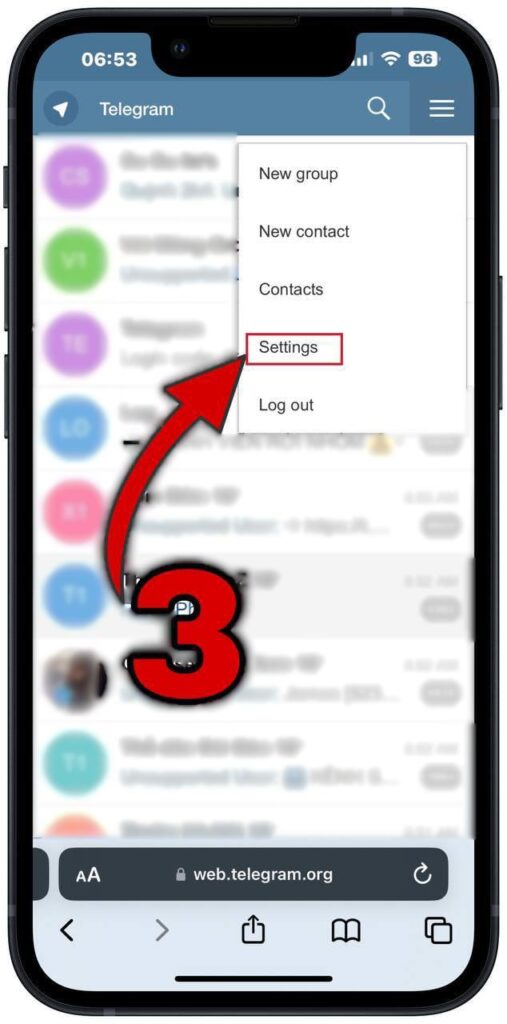

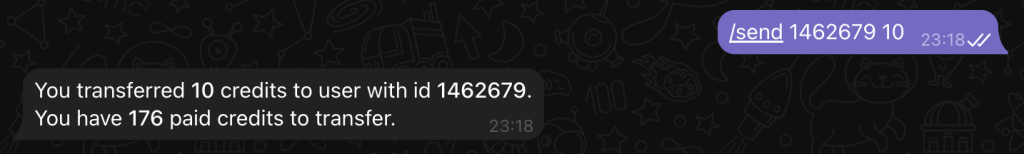

The Telegram Bot Dilemma: A Haven for Unregulated AI

The “Shirt Up Video” tool is currently confined to two Telegram bots, likely because of the platform’s lax moderation and the sense of anonymity it gives users. That anonymity is limited, however, and it doesn’t shield users from accountability: Telegram is a centralized service, ordinary chats (including bot chats) are not end-to-end encrypted, and accounts and payments leave traces. Law enforcement agencies increasingly work with tech firms to trace illicit deepfake activity.

Protecting Yourself and Others

- Avoid Engagement: Steer clear of tools promoting non-consensual content.

- Report Abuse: Flag Telegram bots or channels hosting such features.

- Legal Recourse: Victims can pursue takedowns under copyright law (if they own the original image) or privacy statutes.

AI ethicist Mark Chen notes: “Technology isn’t inherently harmful—it’s human intent that defines its impact. Prioritize consent, or risk becoming complicit in exploitation.”

Conclusion: Innovation Must Not Eclipse Morality

The “Shirt Up Video” AI tool underscores a critical juncture in tech ethics. While its technical prowess fascinates, its potential for harm is undeniable. As lawmakers scramble to curb deepfake abuse, users must ask: Is momentary curiosity worth enabling trauma—or a criminal record?

For now, this feature remains a cautionary tale. Society’s response will determine whether AI evolves as a force for creativity or becomes a weapon of exploitation.
